AI, security, and privacy - what happens to the messages sent to ChatGPT?

AI and ChatGPT.

It's something we can't avoid today - it's the future! And we predict that it's something we'll see much more of. As you read this, new developments are already happening in the field. But what does it mean for your security and privacy when robots become so smart?

We hear about it everywhere - both positively and negatively, and we're asked to engage with these tools. You've probably already experimented with ChatGPT or some of the many other AI tools and models. But have you thought about what happens to all the content you share and create through these tools? Who has access to the information, and can others exploit it for their own benefit?

Where there are opportunities, there are also pitfalls, and criminals are quick to react to them. So, what about them? What AI tools do they have right now that can assist them in their illicit activities?

Where does my prompt/message end up?

To answer the question, we need to delve into a specific tool. In this case, we choose to take a closer look at ChatGPT, mainly because it is currently the most widespread AI service. However, the same principles apply to many of the other AI services. So, the question should instead be: Where does my prompt/message end up when I ask ChatGPT something?

When you interact with ChatGPT, you input a message, which can be a specific question, and press send. The message then goes into ChatGPT's AI/model, where it is processed so the model can send a response back to you. If you want to learn more about how the entire process works, take a look at one of our previous blog posts here.

The message and, in general, the correspondence you have with ChatGPT are stored by OpenAI, the company behind ChatGPT. Unless you have opted out, the conversation can end up in their massive data repository, where it may be used to further train future versions of ChatGPT.

The model does not learn from your thread while you are chatting, but your entire thread can become part of the material used to make the next version smarter.

"But what's the problem with that?" you might be thinking. Isn't it an advantage that the next version becomes smarter and can provide us with even better and more accurate answers? In that way, ChatGPT can become much more relevant in various contexts. And yes, ChatGPT becomes smarter, allowing you to leverage it more in your daily life and work.

However, a future version may also have been trained on your questions, follow-up questions, and the answers that were given. If there has been a 'conversation' of a more personal nature, a person with malicious intent could prompt the new version and indirectly gain knowledge about you. This kind of attack is usually referred to as 'training data extraction.' It should not be confused with 'prompt injection,' where crafted input is used to make a model ignore its instructions.

"But come on, relax. I have nothing to hide, so why does it matter to me?" you might be asking now. And here, we can agree with you. Many of the conversations held with ChatGPT have absolutely no impact on your privacy. And even if you've asked about more personal matters - like illness and symptoms - many others may have the same ailment. "And I haven't even given my name..." So, is it really important to you?

A hypothetical example could be using ChatGPT at your workplace. Here, without knowing it, you can leak important information about your work, your workplace, and perhaps the customers you deal with. And oops, we have a problem! The problem becomes even greater if you work as a programmer or have anything to do with coding in general.

The issues associated with writing code

There can be many issues associated with writing code and seeking help from ChatGPT. Even though ChatGPT may seem incredibly smart and can streamline your coding work, especially when combined with ChatGPT functions, you need to be particularly cautious.

Let's take a closer look at some interesting scenarios (and remember the knowledge you've gained regarding where the messages we send to ChatGPT end up):

Passwords

Much of the code written today needs to communicate with some form of service. To do so, a login is required, which in most cases involves a long and complicated password.

If you ask ChatGPT to write some code for you that can communicate with one or more of these services, you can quickly end up sending your password along with it. It is then out of your hands and can end up in the training data for the next version of ChatGPT, where there is a risk that it could surface for other users.
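One way to lower this risk is to keep the password out of the code altogether, so there is nothing secret to paste along. The following is a minimal sketch in Python, not a complete solution. The variable name `SERVICE_API_TOKEN` and both helper functions are hypothetical and chosen only for illustration.

```python
import os

# Hypothetical variable name, chosen for illustration only.
TOKEN_ENV_VAR = "SERVICE_API_TOKEN"


def load_service_token(env=os.environ):
    """Return the secret from the environment, or raise if it is missing."""
    token = env.get(TOKEN_ENV_VAR)
    if not token:
        raise RuntimeError(f"Set {TOKEN_ENV_VAR} before running this script")
    return token


def build_auth_header(token):
    """Build an HTTP Authorization header without hardcoding the secret."""
    return {"Authorization": f"Bearer {token}"}
```

If you copy code written this way into a prompt, the prompt only contains the name of the variable, not the secret itself.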

Business secrets

In the context of your work, you may encounter questions or doubts about your code. Seeking help from ChatGPT can be ideal in such situations. To make the process as efficient as possible, it might seem easy to copy your entire code into the prompt to get a quick and accurate response.

The mistake here is that the code may contain confidential data somewhere. It could be, for instance, in the form of business logic. ChatGPT would then receive this data, a future version could be trained on the logic, and parts of it could surface in answers to other users.
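If you do need to share a snippet, you can scrub the obvious secrets from it first. Below is a rough sketch in Python, assuming the secrets appear as quoted values assigned to names such as `password` or `token`. The pattern and the function name `redact_secrets` are our own illustration. It will not catch everything, and it cannot recognize confidential business logic, so a manual read-through is still needed.

```python
import re

# Illustrative pattern only: matches assignments such as
#   db_password = "hunter2"   or   api_key: 'abc123'
# Real secrets come in many more shapes than this.
SECRET_ASSIGNMENT = re.compile(
    r"""(?i)\b(\w*(?:password|passwd|secret|token|api_?key)\w*)(\s*[:=]\s*)(["'])(.*?)\3"""
)


def redact_secrets(source):
    """Replace quoted values of sensitive-looking names with a placeholder."""
    return SECRET_ASSIGNMENT.sub(r"\g<1>\g<2>\g<3><REDACTED>\g<3>", source)


snippet = 'db_password = "hunter2"\ntimeout = "30"'
print(redact_secrets(snippet))
```

In this example the password value is replaced with `<REDACTED>`, while the harmless `timeout` line is left untouched.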

Errors in code

As you've probably already noticed, you need to be quite precise in the message you send to ChatGPT if you want to ensure a response that actually makes sense. And when it comes to code, you need to be even more careful.

This also applies to the response you receive. Even after you have had the code adjusted to your preferences, it can easily contain errors, because ChatGPT doesn't know the context your code runs in. And maybe you don't fully know it either, which makes it difficult to describe exactly what you want in the prompt.

ChatGPT is trained on general and basic code that can be found on the internet. And there's no guarantee that everything available online is entirely error-free.
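A practical habit is to run a few small checks on generated code before you rely on it. The sketch below is a made-up example: `average` stands in for a helper an AI tool might suggest, and `run_checks` exercises both the normal case and the edge cases. Both names are hypothetical.

```python
def average(values):
    """Hypothetical helper of the kind an AI tool might suggest."""
    # Guard added after review: a naive version would divide by zero on [].
    if not values:
        raise ValueError("average() needs at least one value")
    return sum(values) / len(values)


def run_checks():
    """Small checks covering the normal case and the edge cases."""
    assert average([2, 4, 6]) == 4
    assert average([5]) == 5
    try:
        average([])
    except ValueError:
        pass
    else:
        raise AssertionError("empty input should be rejected")
    return True


run_checks()
```

Checks like these don't prove the code is correct, but they quickly reveal whether it handles the cases you actually care about.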

Malicious Code

ChatGPT requires a vast number of different examples of code before the model can generate code that actually works as intended. Therefore, OpenAI gathers a wide range of open-source projects and code forums for ChatGPT to be trained on.

Here, we can only hope that projects with some vetting are chosen - this could include, for example, the number of stars on GitHub.

In the public domain, there are also many examples of code for things like malware that the good guys can study (of course, the bad guys are also watching). All of this means that much of the available code contains errors, and some of it can even be insecure, even if that is not immediately apparent. When you ask ChatGPT to write some code, you should therefore be prepared for a high margin of error.
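To illustrate how insecure code can look perfectly fine at first glance, here is a small self-contained sketch using Python's built-in `sqlite3` module. The table, the data, and the function names are invented for the example. Both functions return the same result for ordinary input, but only one of them is safe.

```python
import sqlite3


def make_demo_db():
    """Create an in-memory database with two example users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "user")],
    )
    return conn


def find_user_unsafe(conn, name):
    # Insecure: user input is pasted straight into the SQL text.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return [row[0] for row in conn.execute(query)]


def find_user_safe(conn, name):
    # Safer: a parameterized query treats the input as data only.
    query = "SELECT name FROM users WHERE name = ?"
    return [row[0] for row in conn.execute(query, (name,))]
```

With an input such as `x' OR '1'='1`, the unsafe version returns every user in the table, while the parameterized version returns nothing. This is the kind of difference that is easy to miss when you only skim the code you get back.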

Today, many development tools also allow you to use AI to generate code. Often, you have the option to control whether your code can be used for training, although this feature may require you to pay a small fee, as is the case with GitHub Copilot.

Regardless of the scenario, the advice remains the same. Be cautious with the code you provide to different systems, make sure to thoroughly review the code you receive in return, and understand it before using it.

What can I do to protect my information?

Now, you probably want to know what you should do to ensure that your information doesn't end up in the wrong hands. Fortunately, there are two methods you can use:

-

Avoid sharing information that shouldn't be public with ChatGPT and other AI tools and models.

-

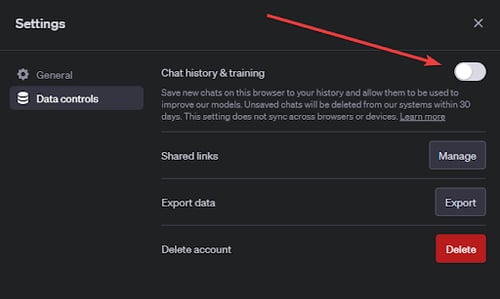

Make sure to disable history and your consent for ChatGPT to use your messages for training. You can keep it turned off all the time, or you can choose to turn it off only when you know you're about to share some information that shouldn't be stored or known by others.

If you are using ChatGPT, there is also an Enterprise version available. This version places a higher emphasis on security and privacy.

Many of the other AI services offer similar functionality.

If you use ChatGPT to generate code, you can take advantage of the same feature. However, it won't solve the problem of poor or malicious code. In this case, there is no other way than to ensure that you thoroughly review your code and understand it 100% before using it for a real purpose.

To be entirely on the safe side, you can also have another programmer or someone with coding expertise review your code for you or alongside you. Remember that, in the end, you are responsible for the code you choose to use. Therefore, you are also accountable if errors or vulnerabilities in the code lead to a data leak or disrupt your environment.

Dark web AIs

As we mentioned earlier, where there are opportunities, there are always pitfalls, unfortunately. Cybercriminals have naturally entered the realm of AI, exploiting the new possibilities.

They don't limit themselves to the conventional AIs that the rest of us use for legitimate purposes. Criminals have already developed a range of different AIs that can be used for their more illicit intentions.

If you take a look on the dark web, where many criminals hang out online, you'll come across AIs designed, among other things, to create malware, discover unknown and new system vulnerabilities, and assist in old-fashioned phishing/scams.

There isn't much you can do to protect yourself from these threats yet. It's disheartening news, but it simply means you need to be extra vigilant online. You should watch out for potential scam/phishing attempts, thoroughly inspect your infrastructure, and enhance security where you know it's lacking. There are almost always areas where security can be improved, so start an audit right away.

Fortunately, there is also ongoing work on completely legitimate AIs that can help combat scams/phishing and identify any security vulnerabilities you may have. This means you'll be able to get the necessary assistance to close those gaps.

These AIs come from reputable security companies such as Snyk, Check Point, and Sophos.

So, there is hope on the horizon!